Brilliant Machines

Sure, robots are cool. Maryland researchers are making them smart

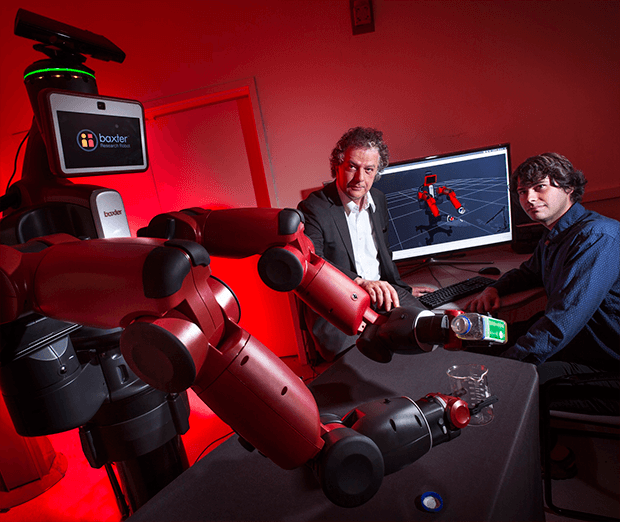

by Chris Carroll | photo by John T. Consoli

The hulking black and red robot carries out its tasks like a distracted 3-year-old helping out in the kitchen. It’s finally able to grasp a box of tomatoes and dump it awkwardly into a bowl, but not without accidentally squishing one.

Compared to the powerful, precise industrial robots found on production lines worldwide, it doesn’t look very impressive.

So why all the excitement in the robotics world over this demonstration in the Autonomy Robotics Cognition (ARC) Lab, jointly run by the Institute for Systems Research and the University of Maryland Institute for Advanced Computer Studies?

Because the shaky salad-making attempt isn’t the simple, scripted performance of an industrial robot, which a technician could preprogram to dump tomatoes quickly and smoothly.

If you wanted the factory robot to add other ingredients, you’d need a program for each one. And make sure they’re always in the appointed places, because this robot lacks smarts to look for things. Need it to slice a cucumber? Get ready for a programming nightmare.

A very different approach is needed if we’re ever going to have broadly useful robots, says Yiannis Aloimonos, a computer science professor.

“We’re trying to build the next generation of robots,” he says. “These are robots that can interact with people naturally and do a variety of useful things.”

He and his colleagues in the ARC Lab are devising revolutionary ways for robots to teach themselves useful things, avoiding arduous programming for every task. Ultimately, they hope to impart something similar to the situational awareness—even common sense—that humans use to get things done.

“We’re trying to really go after true autonomy,” says John Baras, professor of electrical and computer engineering and founding director of the lab. “Nothing is preprogrammed. It has to be able to react to whatever it finds in the environment.”

They’re starting in the kitchen. The cooking robot learned its rudimentary culinary skills by analyzing dozens of YouTube cooking videos. The feat was presented in a groundbreaking paper by Aloimonos, research scientist Cornelia Fermüller and computer science students Yezhou Yang Ph.D. ’15 and Yi Li Ph.D. ’11.

At first, they tried having the system simply ape the motions of people in videos, but that resulted in robots unable to do much of anything.

“We discovered what we needed to imitate was not the movement—it was the goals that existed within an activity that had to be imitated,” Aloimonos says.

So they devised a vision system able to recognize important objects and actions within the video, breaking those down into “meaningful chunks,” he says.

To pick up the tomatoes, the robot analyzes how a YouTube cook grasps a similar container, decides on its own most effective grip, and only then lifts the container. From there, the robot strings together known objects and observed actions into completed tasks. Or that’s the plan.

Even if the lab’s robots learn to successfully imitate tasks this way, they’ll still need to get smarter. That’s where Don Perlis comes in. The computer science professor specializes in an area of artificial intelligence known as commonsense reasoning.

Perlis says he realized the limits of his systems just as the computer vision specialists, Aloimonos and Fermüller, realized the limits of theirs.

“They were saying, ‘We need to add reasoning into our vision systems,’” he recalls, “and we said we need to add perception into our reasoning systems.”

Common sense—the ability to deal successfully with things that are uncertain and ill-defined—will be a key attribute for useful robots, Perlis says.

There are plenty of so-called expert systems programmed to do one thing well, like win at chess or prospect for minerals. But when the subjects are vague or poorly understood—how humans process speech, for example—artificial intelligence falters.

Perlis and his group are focusing on how computers deal with mistakes and unexpected changes.

“That view says the world is so complicated there’s not going to be a perfect set of axioms to get everything right,” he says. “You may have figured out everything yesterday, but today something will be different.”

Imagine you have a helper robot you order to bring a book from a library at the other end of the building, he says. You expect it to find its way to the library and locate the book.

But what if a shelf has fallen? A robot lacking common sense could look through the entire pile while you wait impatiently in your office. A shrewder robot might realize the task can’t be completed on time and send you an email. With no other assignment, it might even clean up the books, Perlis says.

To be completely functional, robots, like people, need flexibility, he says.

“They’re not going to do everything perfectly, and humans don’t either,” he says. “But we’d like them to muddle through and get things done.”
